In a recent blog post, we discussed how artificial intelligence (AI) can do tasks that people would otherwise do. Substituting a tool for a person can be an effective way of chomping through repetitive and relatively unsophisticated jobs. Giving people AI-powered tools can also help them be more productive. These tools don’t take jobs away directly; they make tasks easier.

However, every opportunity carries risk. How do you mitigate that risk so you can capitalise on the opportunity? You need a framework and a set of policies to govern the way you work.

There are three questions we need to ask ourselves when testing any solution that contains AI:

1. Is it legal, and can it pass regulatory scrutiny?

2. Can we trust it to meet the expectations we set?

3. What reasonable use policy do we need to wrap around it?

If we don’t ask these questions, or we arrive at incorrect conclusions, then we are exposed to safety risks and costs. We may promise gold and deliver mud. We risk implementing a tool that reduces staff and service user satisfaction, rather than increasing it.

A framework for creating principles and policies that enable AI to land safely

The answer to question 1 requires legal expertise, and your vendor should be able to provide assurances, for example through their clinical safety assessments if it is a clinical solution. The answers to questions 2 and 3, however, are more complex and require a new approach to defining projects. This should start with establishing a set of goals for the use of AI. These goals can be enshrined in principles, which are then used to create specific policies for particular use cases.

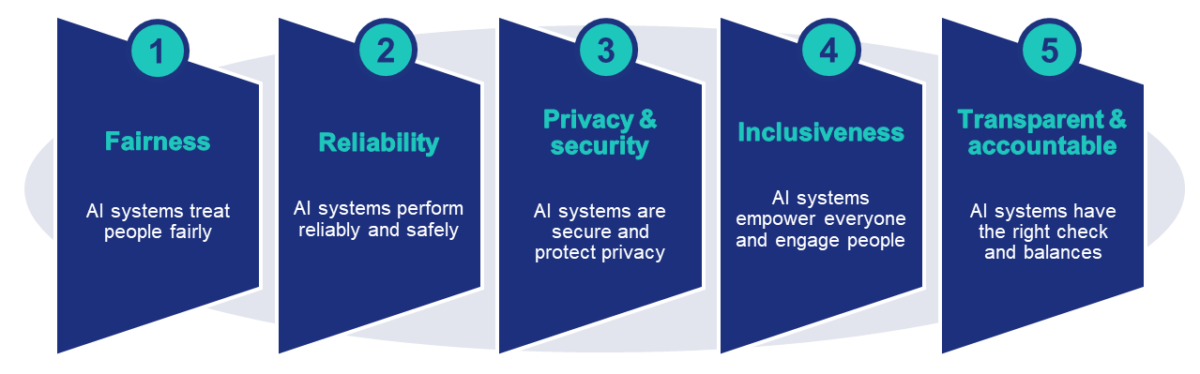

Tom Lawry’s seminal book, AI in Health (HIMSS Publishing; 1st edition, February 2020), provides a great starting point for understanding potential goals for your organisation.

By setting goals, you’ll be well on the way to building trust amongst staff and service users that AI is being used appropriately to support better health and care. You’ll also be able to start answering questions such as these:

How do we ensure fair and equitable treatment of staff and service users? How do we ensure the safety and effectiveness of interactions with AI-powered services?

Will our AI-based systems operate reliably, safely and consistently, even under cyber-attack? Are they designed to operate within a clear set of parameters under expected performance conditions?

How do we control and process data and protect sensitive data from improper use? How do we ensure the provenance of data models? How do we protect the computing estate?

How do AI tools and people work together? How do we avoid digital inequality? How do we avoid intentional or unintentional exclusion? How do we ensure AI is creating a net benefit across multiple measures? What is the impact on staff (for example, on their wellbeing)?

How do we govern AI-powered work? What can/can’t we do? What checks and balances need to be in place? What certifications do we and our vendors need?

These are all solid questions, but how do you bake the answers into an AI policy for your organisation?

The five key components for a successful AI policy

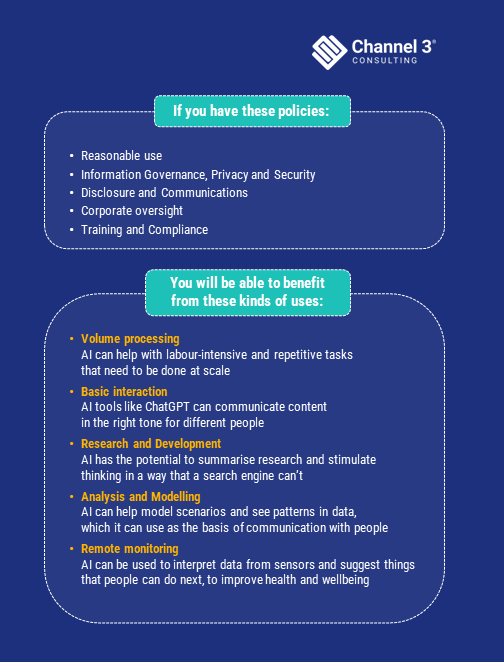

Your AI policy must have the following five components if it is going to address the sorts of questions listed above.

- It must explain, in a reasonable use policy, what AI can and can’t be used for.

- It must translate governance, privacy and security legislation into a set of understandable, trainable and actionable instructions that allow compliance to be checked.

- It must satisfy citizens’ need to understand AI and enable them to play an active role in controlling their data.

- It must allow an organisation to govern its use of AI and demonstrate legal and ethical compliance.

- It must say how staff, patients and other service users will be trained to use AI applications. It should also recognise that the user experience of any AI-powered tool needs to be so good that nobody has to drop into our homes to train us.

If you put this policy in place first, you will have a much better chance of implementing this technology safely. We know it has the power to change the world, but we need to land it in a safe and managed way, one where expectations are set, benefits are delivered and risks are mitigated.

We would be really interested to hear from organisations that want to deploy generative AI safely, so that we can share experiences.