Before we go too much further, it is worth asking ourselves what we can use the 2023 generation of AI products for, and what needs to be in place to use them safely.

What is artificial intelligence (AI)?

Last month our friends at the BBC published an A-Z of Artificial Intelligence. Broadly, AI falls into two camps: generative AI, which is language-based and enables computers to mimic humans, and predictive AI, which uses maths to predict things like the growth rates of tumours. At some point these capabilities will converge, in the way that the brain can listen, interpret, reason and then communicate or act. But probably not yet. So, what can you do now? Well, you can use AI to perform specific tasks!

Can AI replace or augment staff?

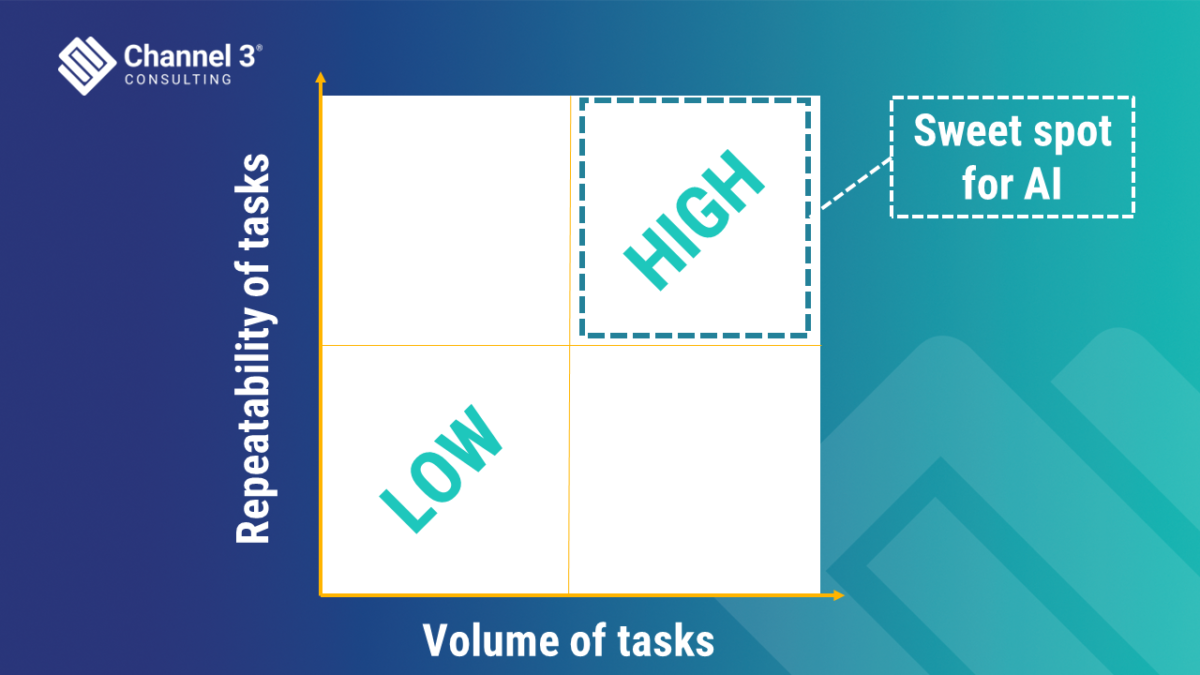

We do not have enough staff to meet the demand for health and care services. So we are bound to ask how machines could do some of the things that humans do, replacing or augmenting us. This is especially true where, within the boundaries of the task we set, the machine's work is indistinguishable from a human's. The natural starting points are simple tasks that need to be done at scale. Think of the Industrial Revolution, when machines enabled fewer people to produce more. In 2023, the computer is like the spinning jenny, churning through repetitive tasks. The diagram above shows what this means: the further to the right a task sits, the easier it is to apply this year's vintage of AI. Even for tasks in that zone, though, implementing AI presents challenges and is not straightforward.

Some organisations have made a start on AI

Some organisations are making progress with robotic process automation (RPA) on this basis, but RPA on its own isn't AI. The robot follows a simple, routine process. It doesn't learn. It doesn't flex to improve the experience of the person being processed. It can't cope with ambiguity. Generative AI (like ChatGPT) has the potential to enhance these bots, because layering a language model over the process makes the bot capable of interacting with a person. It might not turn every patient interaction into a high-quality conversation, but it has the potential to handle more interactions to the same quality as a human. This is useful for solving basic problems such as completing empty fields in forms, checking and verifying the accuracy of data, and taking people through very simple decision trees.

Is generative AI over-hyped then?

Some tasks have the potential to become repeatable at scale because of AI. Take the predictive AI models used in diagnostic imaging, which, because they have been trained and tested at scale, have the potential to speed up the reading and interpretation of images. This isn't hype. It is happening.

The situation with generative AI is more complex, not least because the technology is a little less mature. So we need to bear with it while it catches up, without delaying the benefits we can get now. It is true that AI can be biased, because the data it is trained on is biased. It is also true that AI can't currently cope with complex interactions as well as a human can. Further, it is true that ChatGPT is only as good as the question you ask it. All these points have been made many times and may slow the adoption of AI.

In 2023, though, we need to ask whether these are fair tests. Is this like criticising a Model T Ford for not being fast enough for Formula 1? Generative AI can't do everything, but it can do some things, so now is a good time to start experimenting and gaining the initial benefits.

So what does this mean for you? How do you balance the opportunity with the risk?

There are four questions we need to ask ourselves when testing any solution that contains AI:

Is it legal, and does it pass regulatory scrutiny? This covers every part of the AI supply chain, including the data shared to train the models.

Can we trust it to meet the expectations we set?

What reasonable use policy do we need to wrap around it?

If the organisation relies on it, how will the organisation cope if it fails?

If we don’t ask these questions, or if we reach the wrong conclusions, we are exposed to safety risks and costs. We may promise the earth and deliver mud. We risk applying a tool that creates more work and lowers staff and service-user satisfaction instead of raising it.

In our next blog, we will discuss how you can answer these four questions!